6. Major tech companies exert a great deal of influence

The issue above ties in with the power of the major tech companies, namely Facebook, Microsoft, Google, Apple, Alibaba, Tencent, Baidu and Amazon. These eight companies have the financial capacity, the data and the intellectual capital to raise the quality of artificial intelligence enormously. The risk therefore exists that very powerful technology ends up in the hands of a relatively small group of commercial (!) companies. And the better the technology, the more people will use it, the more data it gathers and the more effective it becomes, et cetera. This gives the big boys an ever greater advantage. The winner-takes-all mechanism of the Internet era also applies to data (data monopolies) and algorithms.

In addition, so-called ‘transfer learning’, in which what a model has learned on one task is reused for another, is becoming more and more effective. As a result, less and less data is needed to achieve a good result. A Google system, for example, had been offering quality translations from English to Spanish and from English to Portuguese for some time. With the help of new transfer learning techniques, this system is now able to translate from Spanish to Portuguese and vice versa with very limited input. The major tech companies own both the data and these transfer learning models.

Experience shows that the previously mentioned commercial objective will always dominate, and it remains to be seen how these companies will use the technology in the future.

7. Artificial superintelligence

I personally believe the debate about the drawbacks of artificial intelligence is a little too often dominated by discussion of superintelligence. This term refers to systems with an intelligence that far surpasses human intelligence in several respects. As a result, they are able to acquire all manner of skills and expertise without human intervention, train themselves for situations unknown to them and understand context. They would be a kind of superintelligent oracle that regards human beings as mere ‘snails in the garden’: as long as they do not bother it, they are allowed to live.

First of all, I believe we should take this scenario seriously and focus primarily on conveying our ethical values to intelligent systems. In other words, we should not teach them rules set in stone, but something about human considerations. This is very important. It would be best to provide them with a ‘conscience’; otherwise they could behave like antisocial personalities. That said, I do not believe we will see even the slightest hint of consciousness in these systems in the short term. The technology is far too young for that, if it is possible at all to create consciousness in these systems. So it is perfectly sensible to reflect on superintelligence and take it seriously, but there are other risks that are more pressing right now.

Lest I forget, I would like to add a nuance here. I am not that concerned about a system endowed with some form of consciousness taking over the world. But… we will probably be affected far more often by systems programmed to pursue a specific objective relentlessly, without taking into account things we as humans consider important, such as empathy and social equality. Simply because that is how they are programmed. What matters to us is their blind spot. And that is NOT science fiction.

Facebook is a ‘great’ example of how an artificial intelligence system can produce a thoroughly negative outcome. Facebook’s increasingly smart algorithms have only one goal: keeping you on the platform for as long as possible. Creating maximum engagement with the content. Collecting clicks and reactions. But the system is blind to issues such as ‘the objective, factual representation of matters’. Truth is unimportant, because the system is only interested in the time you spend on the platform. Facebook does not care about the truth, with all the harmful consequences this entails.
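To make this ‘blind spot’ concrete, here is a deliberately simplified sketch of a feed ranker whose only objective is predicted time on the platform. It is not Facebook’s actual algorithm, which is not public; the `Post` fields, the numbers and the function names are hypothetical and chosen purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    predicted_minutes: float  # hypothetical model estimate of time a user will spend on it
    is_accurate: bool         # known to us, but never consulted by the ranker


def engagement_score(post: Post) -> float:
    # The ranker's only objective: expected time on the platform.
    # post.is_accurate plays no role at all; that is the blind spot.
    return post.predicted_minutes


def rank_feed(posts: list[Post]) -> list[Post]:
    # Show the most 'engaging' posts first, regardless of truth.
    return sorted(posts, key=engagement_score, reverse=True)


feed = [
    Post("Careful fact-check of a rumour", predicted_minutes=1.5, is_accurate=True),
    Post("Outrageous false claim", predicted_minutes=6.0, is_accurate=False),
    Post("Local news update", predicted_minutes=2.0, is_accurate=True),
]

for post in rank_feed(feed):
    print(f"{post.predicted_minutes:>4} min  accurate={post.is_accurate}  {post.title}")
```

The false but gripping post ends up at the top of the feed. Nothing in the code is malicious: it simply optimises what it was told to optimise, and accuracy was never part of that objective.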

8. Impact on the labour market

AI will put pressure on the labour market in the years ahead. The rapid increase in the quality of artificial intelligence will make smart systems far more adept at specific tasks. Recognising patterns in vast amounts of data, providing specific insights and performing cognitive tasks will be taken over by smart AI systems. Professionals should closely monitor the development of artificial intelligence, because systems are increasingly able to look, listen, speak, analyse, read and create content.

Some people therefore certainly have jobs in the danger zone and will quickly have to adapt. However, the vast majority of the population will end up working alongside artificial intelligence systems. And remember: many more new jobs will be created, although they are harder to imagine than the jobs that will be lost. Social inequality will increase in the years ahead as a result of the divide between the haves and the have-nots. I believe we as a society will have to look after the have-nots: the people who are only able to perform routine manual work or routine brainwork. We should remember that a job is more than just the salary at the end of the month. It offers structure to the day, a purpose, an identity, status and a role in society. What we want to prevent is the emergence of a group of people in our society who are paid and treated like robots.

In short, it is becoming increasingly important for professionals to adapt to the rapidly changing work environment. As computing power keeps growing in line with Moore’s law, the gap between human and machine capabilities will only widen.

9. Autonomous weapons

As recently as this summer, Tesla’s Elon Musk warned the United Nations about autonomous weapons controlled by artificial intelligence. Along with 115 other experts, he pointed to the potential threat of autonomous war equipment. This makes sense: these are powerful tools that could cause a great deal of damage. And it is not just real military equipment that is dangerous. Because technology is becoming ever easier, cheaper and more user-friendly, it will become available to everyone… including those who intend to do harm. One thousand dollars will buy you a high-quality drone with a camera. A whizz-kid could then install software on it that enables the drone to fly autonomously. AI-based facial recognition software is already available, enabling the drone camera to recognise faces and track a specific person. And what if the system itself starts making the life-and-death decisions that are currently being made in war zones? Should we leave this to algorithms?

And it is only a matter of time before the first autonomous drone equipped with facial recognition and a 3D-printed rifle, pistol or other firearm becomes available. Watch the Slaughterbots video to get an idea of what this could look like. Artificial intelligence makes this possible.

10. Everything becomes unreliable – e.g. fake news and filter bubbles

Smart systems are becoming increasingly capable of creating content – they can create faces, compose texts, produce tweets, manipulate images, clone voices and engage in smart advertising.

AI systems can turn winter into summer and day into night. They are able to create highly realistic faces of people who have never existed.

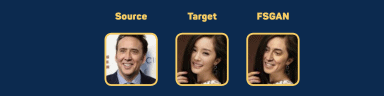

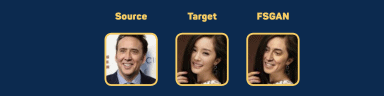

The open-source software Deepfake can paste faces onto moving video footage. This makes it appear on video as though you are doing something that you never actually did (read my report on deepfakes (in Dutch)). Celebrities are already being affected, because people with malicious intent can easily create pornographic videos that appear to star them. Once this technology becomes slightly more user-friendly, it will be child’s play to blackmail any individual. You could take a photo of anyone and turn it into sleazy porn. One e-mail would then be enough: “Dear XYZ, in the attached video file you play the starring role. In addition, I have downloaded the names and data of all your 1,421 LinkedIn connections and I would be able to mail them this file. Transfer 5 bitcoins to the address below if you want to prevent this.” This is known as faceswap video blackmail.

Artificial intelligence systems that create fake content also entail the risk of manipulation and conditioning by companies and governments. In this scenario, content can be produced at such speed and on such a scale that opinions are influenced and fake news is hurled into the world with sheer force, specifically targeted at the people most vulnerable to it. Manipulation, framing, controlling and influencing. Computational propaganda. These practices are already a reality, as we have seen in the case of Cambridge Analytica, the company that gained access to data from up to 87 million Facebook profiles, most of them American, and used this data for a corrupt (fear-spreading) campaign to help get Donald Trump elected president. Companies and governments with bad intentions have a powerful tool in their hands with artificial intelligence.

What if a video surfaces in which an Israeli general appears to talk about wiping out the Palestinians, set against images of what seems to be waterboarding? What if we are shown videos of Russian missiles striking Syrian cities, accompanied by a voice recording of President Putin casually talking about genocide? Powder keg → fuse → spark → explosion.

Take a brief look at this fake video of Richard Nixon to get an accurate impression of what is already possible.

And how do we prevent social media algorithms from offering us ever more ‘tailor-made’ content, thereby reinforcing our own opinions like echoes in a well that reverberate ever more strongly? How do we avoid a situation in which different groups in society increasingly live in their own filter bubble of ‘being right’? The result could be a growing number of filter bubbles on a massive scale, and a great deal of social unrest.

Book Jarno as keynote speaker

And what about ‘voice cloning’? It is now possible to simulate somebody’s voice with software, even though the result is not yet perfect. However, the quality is improving all the time. Identity fraud and cybercrime are lurking risks. Criminals will use software to generate voicemail messages in someone else’s voice instructing the recipient to make a payment. This is social engineering (the use of deception to manipulate individuals into disclosing confidential or personal information that can be used for fraudulent purposes) carried out with cloned voices. And it is already a reality.

11. Hacking algorithms

Artificial intelligence systems are becoming ever smarter, and before long they will be able to distribute malware and ransomware at great speed and on a massive scale. In addition, they are becoming increasingly adept at hacking systems and cracking encryption and security measures, as recently happened with CAPTCHA. We will have to take a critical look at our current encryption methods, especially as artificial intelligence grows more powerful. Ransomware-as-a-service is constantly improving as a result of artificial intelligence. Other computer viruses are also becoming smarter through trial and error.

A self-driving car, for example, is software on wheels. It is connected to the Internet and could therefore be hacked (this has already happened). This means that a lone nut in an attic room could cause a tragedy like the truck attack in Nice. I foresee ever smarter software becoming available for hacking or attacking systems, and this software will be as easy to use as online banking is today.

In hospitals, too, more and more equipment is connected to the Internet. What if the digital systems there were hacked with so-called ransomware? This is software that can lock entire computer systems until a ransom is paid. It is terrifying to imagine somebody causing a widespread pacemaker malfunction, or threatening to do so.

12. Loss of skills

We are losing more and more human skills as a result of using computers and smartphones. Is that a pity? Sometimes it is, sometimes it is not. Smart software makes our lives easier and reduces the number of tedious tasks we have to perform, such as navigating, writing by hand, mental arithmetic, remembering telephone numbers, forecasting rain by looking at the sky, et cetera. None of this is immediately of crucial importance. We are losing skills in daily life and leaving them to technology. This has been going on for centuries. Almost nobody knows how to make fire by hand anymore, for example. In my view, the important question is this: are we not becoming excessively dependent on new technology? How helpless do we want to be without the digital technology that surrounds us?

And, not unimportantly: as smart computer systems increasingly understand who we are, what we do and why we do it, and offer us customised services, isn’t the ability to tolerate frustration an important human skill? To be patient? Or to settle for slightly less than ‘hyperpersonalised’?

In our work, we are increasingly assisted by smart computer systems that can read other people’s emotions and state of mind. This is already happening in customer service, for example, and experiments are being conducted in American supermarkets. To what extent are we losing the ability to make these observations ourselves and to train our own antennae? Will we ultimately become less adept at reading our fellow human beings in a face-to-face conversation?

To an extent this is already happening, of course, because we increasingly communicate via our smartphones. Has this made us worse at reading the person we are talking to face to face? In short, which of our skills, both practical and emotional, do we want to leave to smart computer systems? Are attention, intimacy, love, concentration, tolerance of frustration and empathy aspects of our lives that we are willing to let technology take over to an ever greater extent?

In this respect, AI as a technological development is a double-edged sword: it is razor-sharp on both sides, with potentially very positive and potentially very negative outcomes.

Have I overlooked something, or are you interested in a lecture on the benefits and/or drawbacks of artificial intelligence? Feel free to contact me.
